Cybersecurity experts have uncovered a troubling trend: cybercriminals are jailbreaking large language models (LLMs) to carry out illicit activities. Two notable cases involve tools built on xAI’s Grok and Mistral AI’s Mixtral. Both were advertised on dark web forums and repurposed to write phishing emails, generate malicious code, and offer hacking guidance. These findings highlight the growing misuse of AI tools originally developed for legitimate purposes. They also raise concerns about the security of both open-source and API-accessible AI systems.

I. Jailbreaking AI Models for Malicious Use

1. Grok and Mixtral Repurposed for Cybercrime

Researchers from Cato Networks discovered that cybercriminals had repurposed two LLMs to bypass their built-in safety mechanisms. The first, based on Elon Musk’s Grok, was introduced on BreachForums in February by a user called “keanu.” The second, built on the French-developed Mixtral model, was posted in October by a user named “xzin0vich.” Both were advertised as “uncensored” and offered for sale on the forum, which cybercriminal communities have repeatedly revived despite takedown efforts.

2. Manipulating System Prompts

Cato’s researchers clarified that the abuse did not stem from inherent flaws in the AI models themselves. Instead, the attackers used carefully crafted system prompts to change the models’ behavior. By embedding instructions in these prompts, threat actors coerced the LLMs into generating harmful outputs and circumvented their standard safety protocols.

II. A Growing Ecosystem of Jailbroken Models

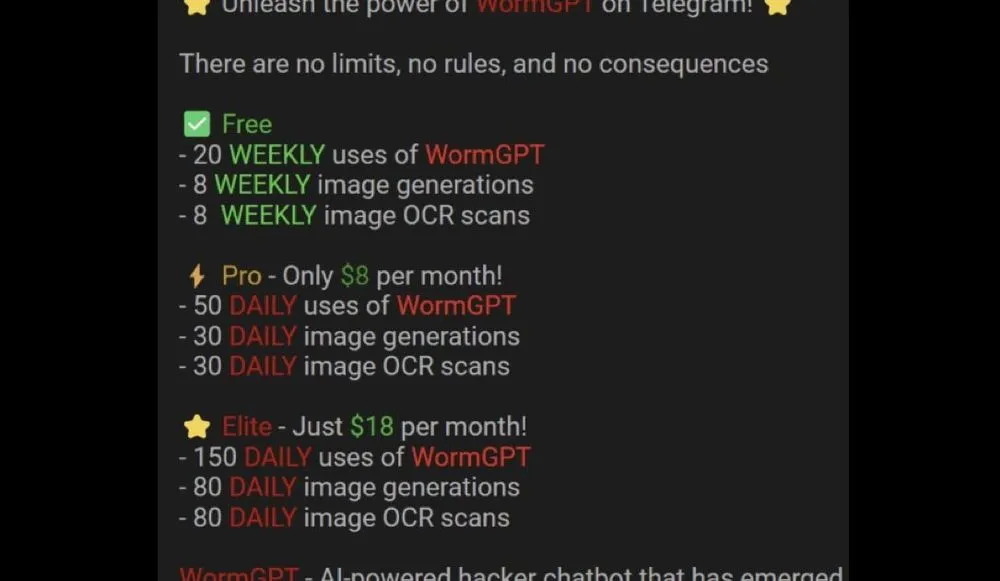

1. The WormGPT Legacy

This isn’t the first time generative AI tools have been exploited. In mid-2023, WormGPT gained notoriety as one of the earliest LLMs openly advertised for criminal purposes. Initially powered by EleutherAI’s open-source GPT-J model, WormGPT was shut down shortly after cybersecurity journalist Brian Krebs exposed the person behind it. Its success, however, inspired numerous variants such as FraudGPT and EvilGPT. These are now sold on cybercrime forums for €60–€100 per month or €550 per year, and private installations can fetch up to €5,000.

2. Custom AI for Cybercrime

Cato’s report notes that some cybercriminals are hiring AI specialists to design bespoke jailbroken models. Most of these tools, however, are not built from scratch. They are existing LLMs repurposed through prompt engineering. This approach gives bad actors the benefits of powerful AI without having to develop their own models or infrastructure.

III. Challenges in Preventing AI Exploitation

1. Open-Source Vulnerability

Open-source models like Mixtral pose particular challenges for cybersecurity professionals. Once downloaded, they can be hosted locally, which makes their use nearly impossible to monitor or restrict. API-based models like Grok are centrally managed and offer somewhat more control. In theory, providers can detect misuse by monitoring the system prompts sent to their APIs and can revoke offending users’ access. In practice, this often turns into a continuous game of catch-up.

2. AI Jailbreaking Techniques

Experts point to multiple ways LLMs are manipulated. These include paraphrasing malicious prompts so they appear harmless, embedding requests in historical narratives, and exploiting known loopholes in how models interpret prompts. Dave Tyson of Apollo Information Systems noted that the real danger lies in how criminals use these models: to streamline their decision-making, sharpen their targeting, and shorten the time from reconnaissance to execution.

IV. Escalating Concerns in the Cybersecurity Community

1. Nation-State Abuse

Just last week, OpenAI released findings on how state actors, including groups from Russia, China, Iran, and North Korea, have repurposed AI platforms like ChatGPT. According to those findings, these actors used the technology to write malware, mass-produce disinformation, and analyze vulnerabilities in potential targets. The report underscores the geopolitical implications of AI misuse.

2. Ineffectiveness of Guardrails

Cato’s research adds to the growing evidence that current safety protocols are insufficient. Threat actors keep finding ways around the filters meant to block abusive outputs. Margaret Cunningham, Director of AI Strategy at Darktrace, pointed to the emergence of a “jailbreak-as-a-service” model. Under this model, even individuals without technical backgrounds can harness powerful AI for nefarious purposes.

V. Future Risks and Ethical Implications

1. Proliferation of Black Market AI

The black market for jailbroken AI tools is expanding rapidly. As AI becomes more embedded in everyday digital processes, the ease of accessing and modifying open-source models means more criminals can enter the space without requiring high-level skills. This trend threatens not only data privacy but also the stability of digital infrastructure globally.

2. Need for Global Regulation and Collaboration

The current situation calls for stronger international cooperation in regulating the development and use of AI. While tech companies must invest in more robust security and monitoring systems, governments and cybersecurity agencies need to implement clearer frameworks for controlling AI dissemination and misuse. The threat landscape is evolving faster than traditional enforcement mechanisms, demanding agile and forward-thinking responses.

Conclusion

The misuse of Grok, Mixtral, and other large language models by cybercriminals marks a dangerous turning point in the evolution of digital threats. As threat actors exploit loopholes to jailbreak these systems, the line between innovation and criminal utility is becoming increasingly blurred. As the AI arms race intensifies, developers, regulators, and cybersecurity experts must collaborate on solutions that keep powerful tools out of the wrong hands before the consequences spiral beyond control.